AI Partnership

Microsoft, NVIDIA and Anthropic announce strategic partnerships

Anthropic is making a major bet on Microsoft’s Azure cloud: it has committed to buy $30 billion of Azure compute capacity. On top of that, it will contract additional compute capacity of up to 1 gigawatt, built on NVIDIA Grace Blackwell and Vera Rubin systems.

Massive Compute Deal

Anthropic’s Azure purchase is a multi-year commitment to compute capacity.

The NVIDIA systems behind it are built for large-scale AI training and inference.

The deal is meant to support the rapid scaling of current and future Claude models.

Joint Work on Future AI Systems

NVIDIA and Anthropic are forming their first deep technology partnership, collaborating on design and engineering.

Anthropic will optimize its models for performance, efficiency, and total cost of ownership on NVIDIA hardware.

NVIDIA will optimize its future architectures for Anthropic’s large-model workloads.

Claude Models Expand on Azure

Microsoft will make Anthropic’s frontier Claude models available to customers in Microsoft Foundry (formerly Azure AI Foundry).

Claude will also remain available in GitHub Copilot, Microsoft 365 Copilot, and Copilot Studio.

Teams that already use Microsoft tools get more model choice for writing, coding, and workflow automation.

Major Funding from All Sides

NVIDIA plans to invest up to $10 billion in Anthropic.

Microsoft will invest up to $5 billion to help support new model training and cloud growth.

Together, the investments give Anthropic long-term capital and resources for Claude development.

Claude Goes Multi-Cloud

Claude models will run on Azure, AWS, and Google Cloud.

This gives companies more freedom to choose where they want to run advanced AI tasks.

This deal sets the stage for faster AI progress and stronger competition in cloud compute.

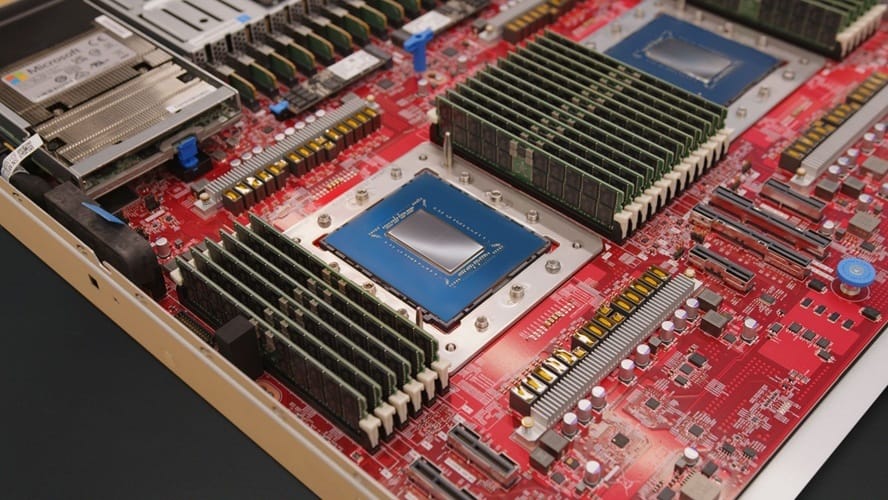

AI Hardware

Announcing Cobalt 200: Azure’s next cloud-native CPU

Microsoft has revealed its next-generation ARM-based chip called Cobalt 200, built specifically for cloud workloads.

It promises up to 50% better performance than its predecessor, Cobalt 100, while remaining energy efficient.

What Makes Cobalt 200 Powerful

Cobalt 200 has 132 active cores, built on ARM’s Neoverse CSS V3.

Each core runs at its own voltage and frequency (per-core dynamic voltage and frequency scaling, or DVFS), so power use can track what each core’s workload actually needs.

Microsoft also added dedicated on-chip accelerators for common tasks such as data compression and encryption, so those tasks don’t eat into CPU performance.

Security Built In

Memory encryption is enabled by default.

It supports ARM’s Confidential Compute Architecture (CCA), which helps isolate virtual machines from the host system.

Microsoft also built in a hardware security module (HSM) that works with Azure Key Vault to protect encryption keys.

Smarter Design from Real-World Data

The team didn’t rely only on standard benchmarks. It collected data on how real Azure workloads such as databases, web servers, and analytics actually run, and built over 140 benchmark variants of those patterns to guide the design.

They simulated 350,000 different configurations to find the sweet spot for performance and power use.

Better Infrastructure Integration

Cobalt 200 works closely with other parts of Azure’s infrastructure.

For instance, Cobalt 200 servers use Azure Boost, which offloads networking and storage processing to custom hardware and frees up the CPU cores.

The result is faster network throughput and lower latency when your app accesses remote storage.

Cobalt 200 shows Microsoft doubling down on custom chips — aiming for higher cloud performance, better efficiency, and stronger security.

📺️ Podcast

Who Really Pays When the Cloud Fails? Inside the October 2025 AWS Outage

The AWS outage in October 2025 has reignited concerns about the financial impact of cloud service disruptions and intensified debate over who carries the burden of resulting losses.

As businesses grappled with widespread downtime, lost sales, and customer dissatisfaction, the spotlight turned to liability and recovery.

Most standard service level agreements (SLAs) with leading cloud providers like AWS sharply limit liability, offering only service credits, not cash, as compensation for outages.

These credits typically fall far short of covering actual losses or reputational damage.
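To see why credits fall short, here is a minimal sketch of how a tiered, percentage-of-bill service credit is typically calculated. The tier thresholds, the $20,000 monthly bill, the 15-hour outage, and the $50,000-per-hour loss are all hypothetical values chosen for illustration; they are not AWS’s actual SLA terms or figures from the October outage.

```python
# Hypothetical SLA credit tiers: (minimum monthly uptime %, credit as % of the
# monthly bill). Illustrative only; not any provider's actual terms.
CREDIT_TIERS = [
    (99.99, 0),   # SLA met: no credit
    (99.0, 10),   # 99.0% up to just under 99.99%
    (95.0, 30),   # 95.0% up to just under 99.0%
    (0.0, 100),   # below 95.0%
]


def monthly_uptime_pct(downtime_hours: float, hours_in_month: float = 720.0) -> float:
    """Uptime as a percentage of the month (30 days = 720 hours by default)."""
    return 100.0 * (hours_in_month - downtime_hours) / hours_in_month


def service_credit(monthly_bill: float, uptime_pct: float) -> float:
    """Credit owed under the hypothetical tiers: a slice of the bill, not the loss."""
    for min_uptime, credit_pct in CREDIT_TIERS:
        if uptime_pct >= min_uptime:
            return monthly_bill * credit_pct / 100.0
    return monthly_bill  # only reached if uptime_pct is negative


if __name__ == "__main__":
    bill = 20_000.0            # hypothetical monthly cloud bill (USD)
    outage_hours = 15.0        # hypothetical outage length
    loss_per_hour = 50_000.0   # hypothetical lost revenue per hour (USD)

    uptime = monthly_uptime_pct(outage_hours)
    credit = service_credit(bill, uptime)
    loss = outage_hours * loss_per_hour
    print(f"uptime {uptime:.2f}%  credit ${credit:,.0f}  loss ${loss:,.0f}")
```

With these assumed numbers, uptime is about 97.92%, the credit is $6,000, and the lost revenue is $750,000, so the credit covers under 1% of the loss. The credit is capped by what the customer pays the provider, while the loss scales with the customer’s own business.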

In the aftermath, many organizations are re-examining their contracts and discovering that they bear most of the responsibility for indirect or consequential losses.

While cyber insurance or business interruption policies can provide some relief, these are not comprehensive solutions.

Only a minority of large enterprises manage to negotiate customized terms that include more favorable compensation or financial remedies, and even these often exclude force majeure events such as natural disasters.

Cloud Region

Oracle launches second cloud region in Italy

Oracle is launching a second public cloud region in Italy, this one in Turin.

It is part of Oracle’s effort to expand local access to AI and cloud services.

Why It Matters

The new region will let Italian organizations, from startups to government agencies, run workloads on Oracle Cloud Infrastructure (OCI) while keeping their data inside Italy.

That matters most where data-residency rules are strict and businesses need both low latency and control over their data.

Oracle plans to offer a broad set of services in the region, including generative AI and data analytics, all on OCI.

Local Buy‑In

The region is being built in partnership with Telecom Italia (TIM), using its data center in Turin.

Oracle already has a cloud region in Milan, so this expands its reach in Italy.

Oracle is growing cloud infrastructure in Italy to help local businesses access powerful AI tools under tighter data control.

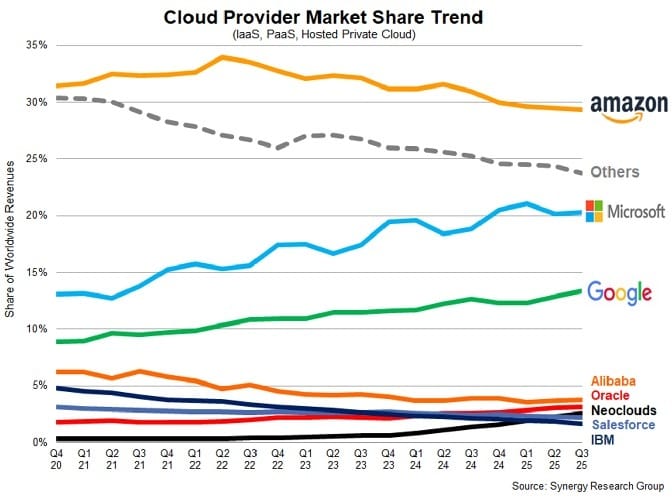

Trends

Neoclouds gradually increasing CIS market share, as Amazon declines

Neoclouds, smaller cloud providers focused on specialized services, are slowly taking share of the cloud infrastructure services (CIS) market from Amazon.

Synergy Research data shows Amazon’s share of the global cloud market falling by 2 percentage points over the past year.

Key Players Driving Growth

Companies like CoreWeave, Crusoe, Lambda, and Nebius are expanding, especially in AI and GPU services.

CoreWeave is now close to entering the top ten cloud providers.

Revenue Growth

Neoclouds grew revenue to $5 billion in Q2 2025, up more than 200% from the previous year.

While growth is fast, they still trail the big three: Amazon, Microsoft, and Google.

Impact for Cloud Users

Neoclouds give businesses more options, especially for AI workloads.

They can offer faster access to GPUs and more specialized services.

The rise of neoclouds shows that even smaller players can shift the cloud landscape and challenge the largest providers.

Cloud Outage

Cloudflare outage on November 18, 2025

On November 18, 2025, Cloudflare’s network started failing around 11:20 UTC, causing many websites to show error pages.

This was not an attack. The problem came from Cloudflare’s internal Bot Management system: a configuration file used to detect bots grew too large after a change to database permissions.

What Broke

The issue caused a lot of HTTP 500 (“server error”) responses across Cloudflare’s network.

Some services were hit harder than others:

Workers KV storage saw a spike in errors.

Turnstile (Cloudflare’s CAPTCHA alternative) stopped working properly.

Most users could not log in to the Cloudflare Dashboard at points, because the login page depends on Turnstile.

Email Security was partly affected: some spam-detection features were less accurate.

Why It Happened

Cloudflare’s “feature file”, a list of traits used by the machine learning model behind Bot Management, is rebuilt every few minutes. After a database permissions change, the query that generates it returned duplicate rows.

That made the file much bigger than usual, exceeding the system’s limit. The system couldn’t handle it and crashed.

To fix it, Cloudflare stopped distributing the bad file, reverted to a previous version, and restarted traffic‑routing systems.
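Cloudflare’s own code is not part of this summary, so the following is only a hypothetical Python sketch of two safeguards against this failure mode: deduplicating query rows before building the file, and checking the result against a size cap before loading it, falling back to the last known-good version (the step Cloudflare took during recovery). MAX_FEATURES, FeatureFile, build_feature_file, and load_feature_file are illustrative names, and the cap of 200 is an assumed value.

```python
from dataclasses import dataclass

# Hypothetical cap on how many features the loader accepts (assumed value).
MAX_FEATURES = 200


@dataclass(frozen=True)
class FeatureFile:
    version: int
    features: tuple[str, ...]


def build_feature_file(version: int, query_rows: list[str]) -> FeatureFile:
    """Build a feature file, dropping duplicate rows while keeping first-seen order."""
    unique = tuple(dict.fromkeys(query_rows))
    return FeatureFile(version, unique)


def load_feature_file(candidate: FeatureFile, last_good: FeatureFile) -> FeatureFile:
    """Return the candidate if it fits under the cap, else keep the last good file."""
    if len(candidate.features) > MAX_FEATURES:
        # Treat an oversized file as bad input instead of crashing on it.
        print(f"rejecting v{candidate.version}: "
              f"{len(candidate.features)} features > {MAX_FEATURES}")
        return last_good
    return candidate


if __name__ == "__main__":
    good = build_feature_file(1, [f"feature_{i}" for i in range(150)])

    # Simulate the permissions change: every row comes back twice.
    duplicated_rows = [f"feature_{i}" for i in range(150)] * 2
    deduped = build_feature_file(2, duplicated_rows)
    print(len(duplicated_rows), len(deduped.features))  # 300 150

    # Without deduplication, the file exceeds the cap and is rejected.
    raw = FeatureFile(3, tuple(duplicated_rows))
    active = load_feature_file(raw, last_good=good)
    print(active.version)  # 1
```

The design choice here is to fail safe: a generated file that breaks an invariant is rejected at load time, so the system keeps serving stale but valid data rather than crashing everywhere the file is distributed.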

Why This Matters

Cloudflare says this was its worst outage since 2019.

For many businesses, this outage was a reminder: even foundational internet services can fail, and internal bugs can ripple widely.

Cloudflare has apologized and pledged to build stronger protections, so this kind of failure does not happen again.
